I recently moved to an area with an excellent local flea market, which over the last few months has given me the opportunity to answer some questions that have been floating around in my head for a few years.

Those questions led me here.

So

I have a love-hate relationship with Thunderbolt. Last time, I wrote a rant about my failed attempts to build a home server using this cursed technology.

Apparently, I forgot to untick the “unlist” button I had set up in WordPress back then?? So almost two months after posting that article, the only people who have read it are those I sent it to directly?? Whoops.

I remain convinced that Thunderbolt is a technology completely unsuited to practical, serious workloads. However, that verdict does not extend to absurd setups that are more art than work…

The Instigator

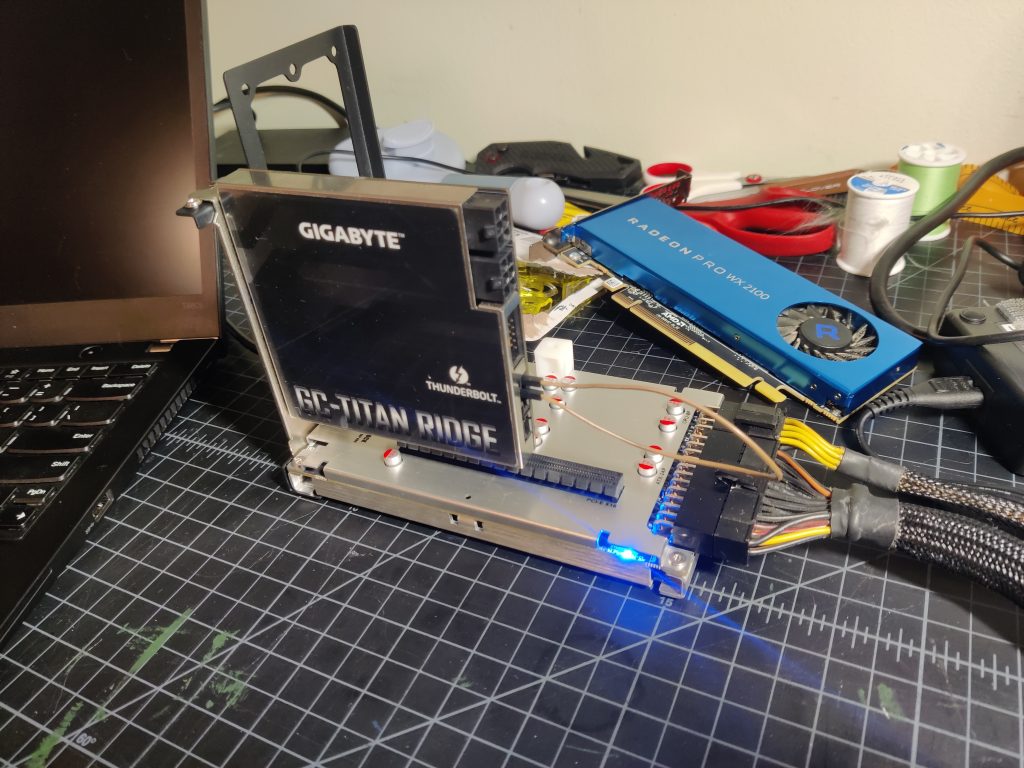

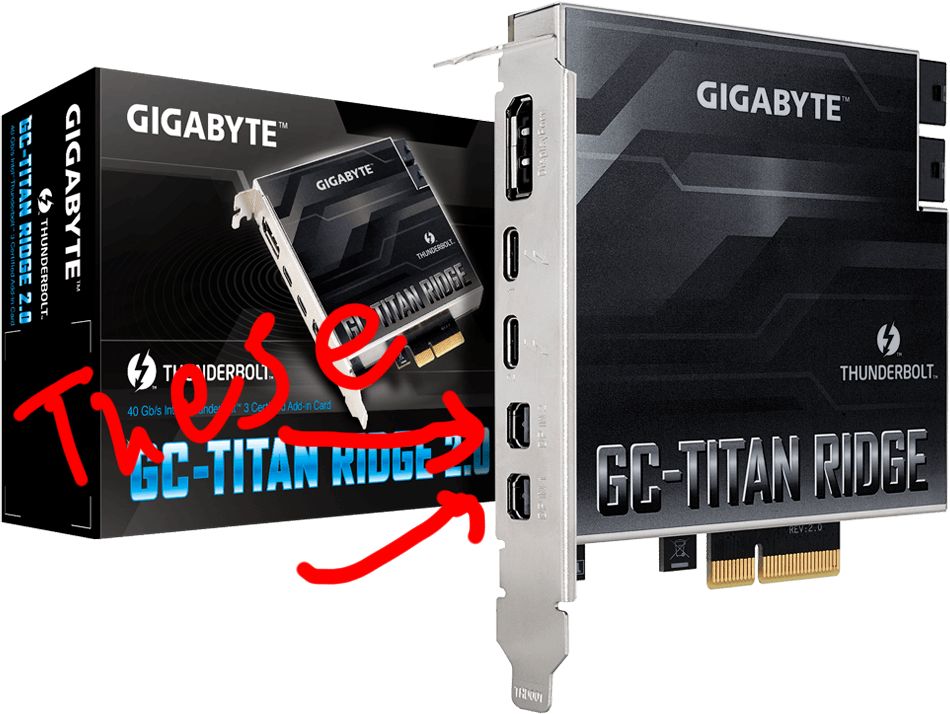

When I was still a Thunderbolt believer in 2021, I purchased this PCIe Add-In Card.

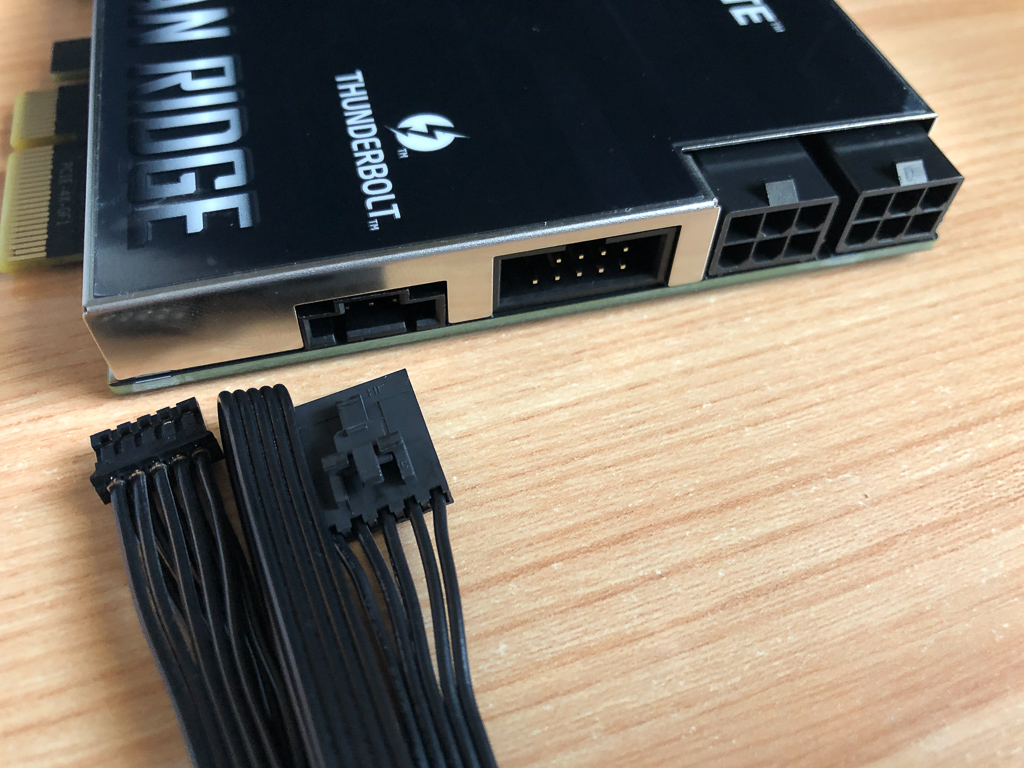

The Titan Ridge is a Thunderbolt controller card that connects over a PCIe 3.0 x4 link and provides two Thunderbolt 3 ports. The back of the card has a few more connectors, all of which, according to the instruction manual, must be attached for the card to operate:

Right to left:

- 2x PCIe 6-pin power connectors. The card advertises 100W USB-C Power Delivery, but I’ve never actually seen this work.

- A USB 2.0 internal header, intended to connect to the motherboard.

- A secret-sauce, proprietary 5-pin header intended to connect to a special Thunderbolt header on Gigabyte motherboards. Every major vendor sells its own add-in card with its own secret-sauce header and a matching header on its motherboards. The reason for this eludes me.

More Thunderbolt talk

There’s something else we need to discuss about Thunderbolt before getting to the question. As I mentioned in the last article, Thunderbolt is a physical connection layer that lets one tunnel PCIe traffic over a USB-C cable. For Thunderbolt 3 and Thunderbolt 4, that tunneled PCIe link tops out at PCIe 3.0 x4.

Certain (pricey!) adapters take advantage of this, and of the flexibility of PCIe, to expose an actual PCIe connector at the end of a Thunderbolt connection. This can range from a simple adapter for NVMe SSDs (which are also just PCIe devices in a different connector) to full PCIe slots like those found on motherboards. This is the backbone of the “external GPU” or “eGPU” concept: the promise that PCIe can be had via an easily accessible connector, letting one connect a full-size desktop GPU to a laptop. There’s a whole forum dedicated to eking the most performance possible out of eGPUs over at egpu.io.

There are three problems with eGPUs that prevent them from going mainstream:

- The chips necessary to expose a PCIe connector are extremely expensive and uncommon. The few domestic manufacturers, like Razer, that made complete eGPU enclosures sold them for north of $300; if you were willing to order sketchy devices off AliExpress, the price historically dropped to “only” $150.

- Thunderbolt incurs a very large practical performance penalty compared to a native PCIe connection. Today’s graphics cards need PCIe 3.0 x16 to reach their full performance. A PCIe 3.0 x4 link is roughly equal to a PCIe 1.1 x16 link, since per-lane PCIe bandwidth roughly doubles with each generation. An RTX 4090 loses roughly 20% of its performance over PCIe 1.1 x16, which matches the practical performance loss when one is run over Thunderbolt 3.

- Thunderbolt is a mess of a protocol that is not useful for serious workloads. Even if it were, connecting and disconnecting a GPU while the computer is turned on (“hot-plugging”) doesn’t really work today and usually just crashes the system.
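The “roughly equal” comparison in the second point holds once line coding is accounted for: PCIe 1.x uses 8b/10b encoding, while PCIe 3.0 moved to the more efficient 128b/130b, so each PCIe 3.0 lane at 8 GT/s carries almost four times the data of a PCIe 1.1 lane at 2.5 GT/s. The Python sketch below works through the numbers. These are theoretical per-direction ceilings only; the sketch ignores packet overhead and does not model any Thunderbolt-specific tunneling overhead.

```python
# Per-lane transfer rate (GT/s) and line-code efficiency for each PCIe generation.
# PCIe 1.x and 2.0 use 8b/10b encoding; PCIe 3.0 onward uses 128b/130b.
PCIE_GENS = {
    "1.1": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
}


def link_bandwidth_gbytes(gen: str, lanes: int) -> float:
    """Per-direction bandwidth in GB/s after line coding, ignoring packet overhead."""
    rate_gt, efficiency = PCIE_GENS[gen]
    return rate_gt * efficiency * lanes / 8  # divide by 8 to convert Gbit/s to GB/s


if __name__ == "__main__":
    for gen, lanes in [("3.0", 4), ("1.1", 16), ("3.0", 16)]:
        print(f"PCIe {gen} x{lanes}: {link_bandwidth_gbytes(gen, lanes):.2f} GB/s")
```

Run as a script, this prints about 3.94 GB/s for PCIe 3.0 x4 versus 4.00 GB/s for PCIe 1.1 x16, and 15.75 GB/s for a full PCIe 3.0 x16 slot.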

Now we have all the info (and fun facts) necessary to pose the question:

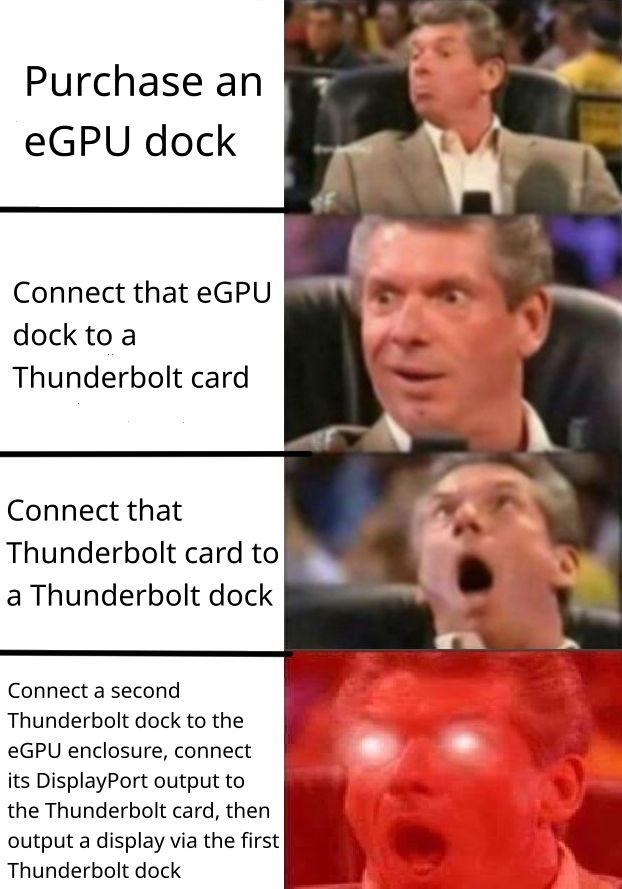

What happens if I plug my Thunderbolt Add-In Card into an eGPU connector?

The Titan Ridge card creates a Thunderbolt 3 link given a PCIe 3.0 x4 interface. An eGPU connector provides a PCIe 3.0 x4 interface given a Thunderbolt 3 link. Could it be possible to drive one off the other in a demented daisy-chain? Could I create a Thunderbolt-over-Thunderbolt connection?

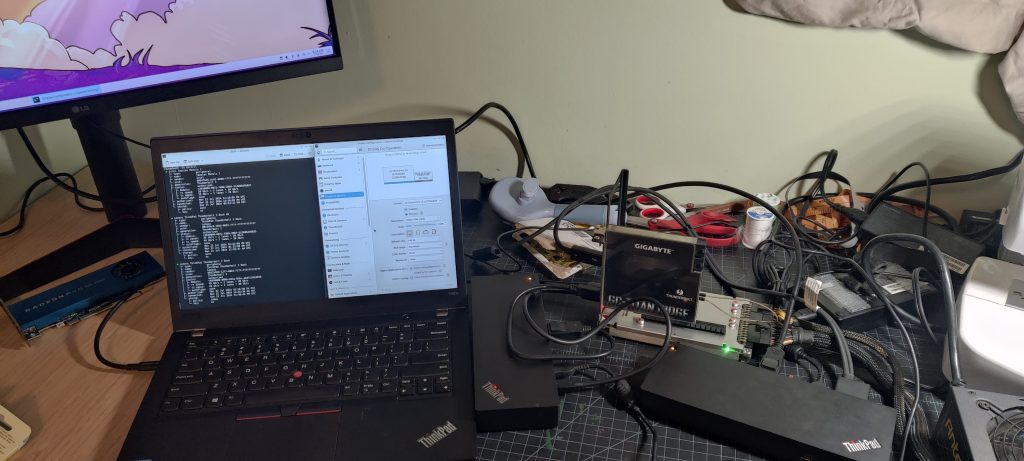

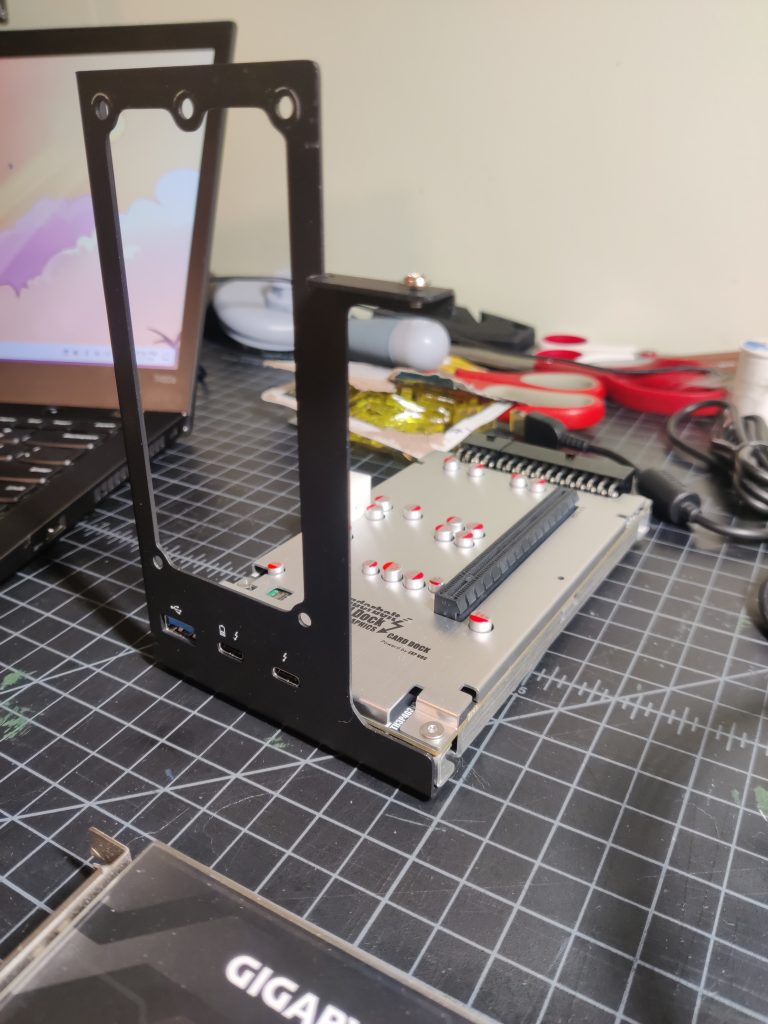

This past weekend, I was finally able to answer this question thanks to the monthly flea market, where one vendor sold me a barebones eGPU enclosure for $80.

The enclosure requires an ATX power supply to operate, so I ran over to another booth and picked up a spare one for $10. Then I got home and the magic began.

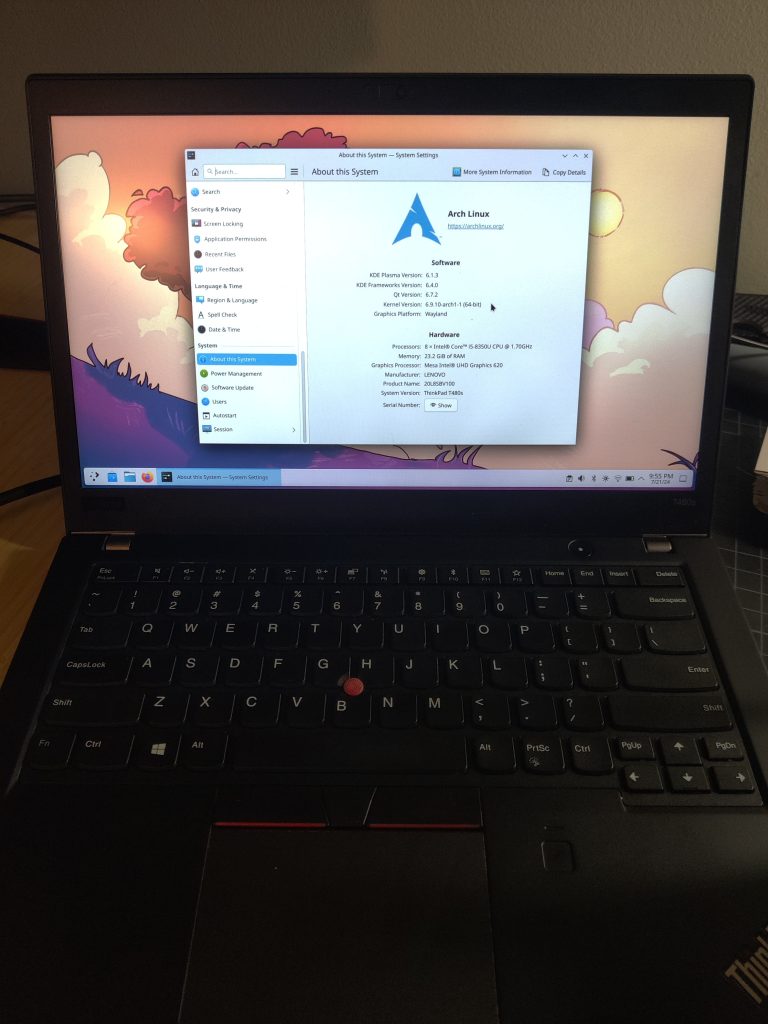

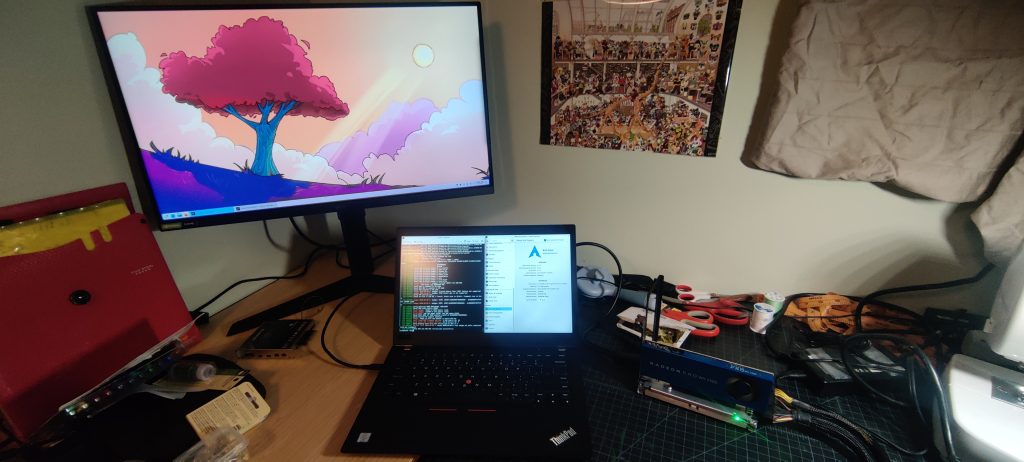

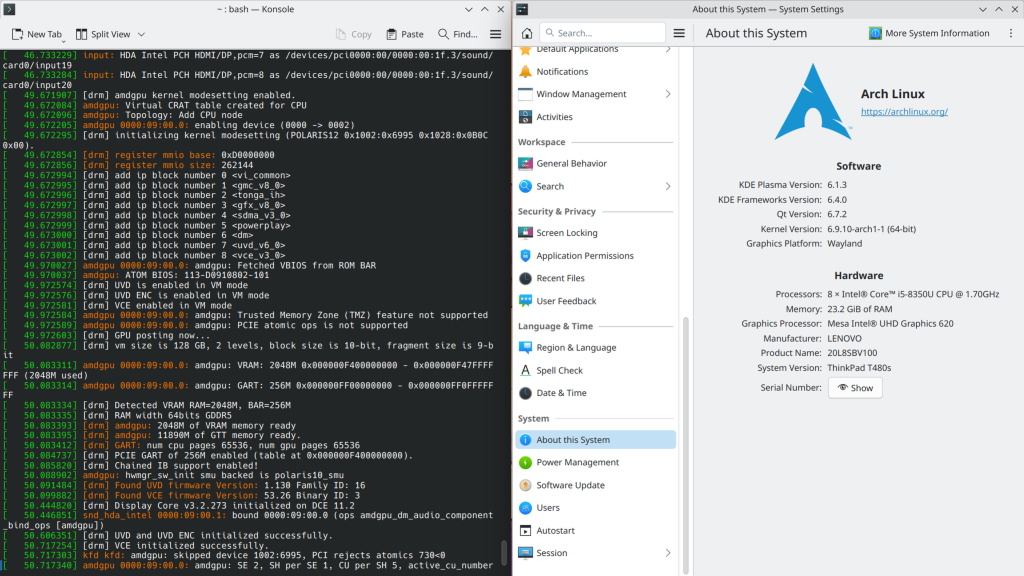

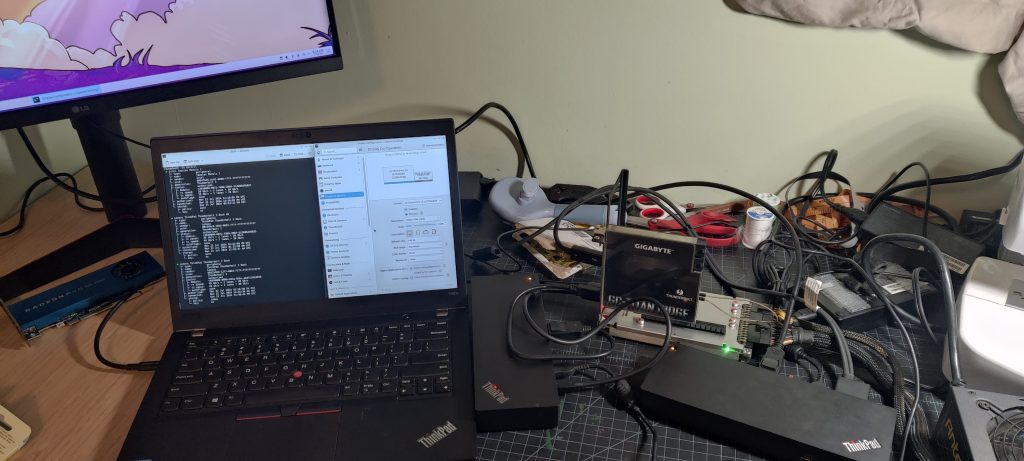

My victim machine was a ThinkPad T480s, purchased the same day to replace another laptop whose soldered RAM had failed the previous week.

First, a sanity check was in order to make sure the enclosure worked. I plugged in a spare GPU, an AMD Radeon Pro WX 2100. Sure enough, the GPU came up and output to a display, no problem!

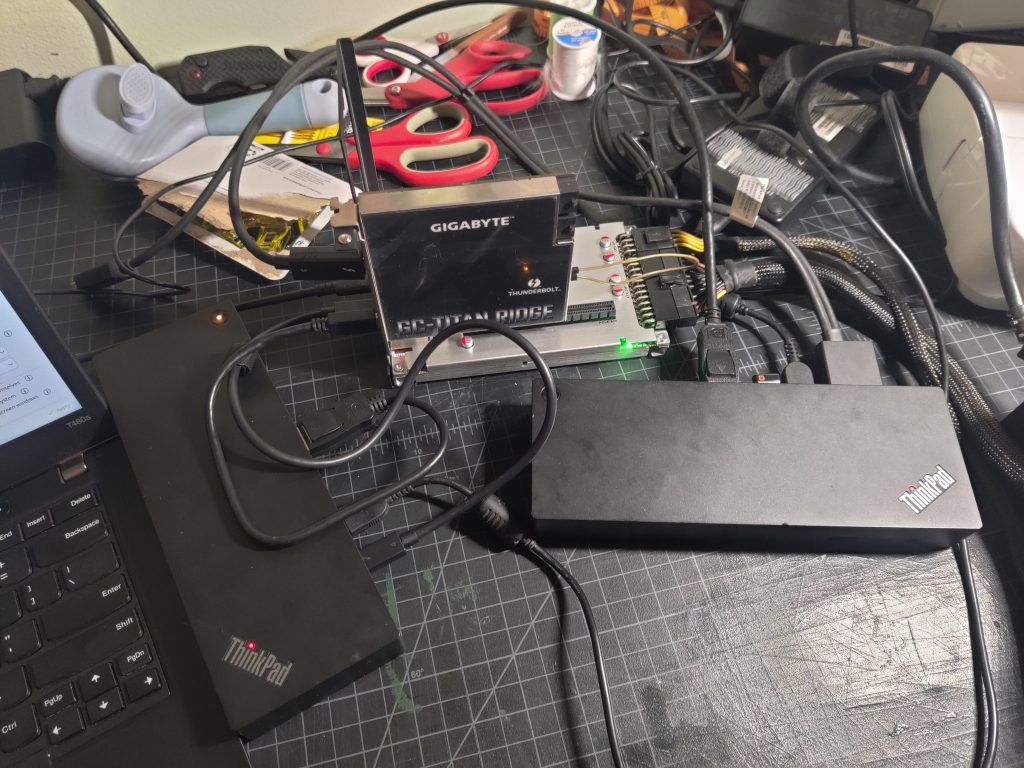

Moving on, could I get the Titan Ridge card to work? What about that special connector we need to attach to the motherboard?

It turns out the motherboard connection is completely unnecessary for the card to operate correctly. Years ago, someone posted a very simple solution: just bridge pins 3 and 5 of the card’s 5-pin header, and it’ll work in any motherboard.

I wired up the 6-pin PCIe power connectors, but didn’t have the cables necessary to connect the USB headers (I would have needed something like this.) Might as well try anyway, right…?

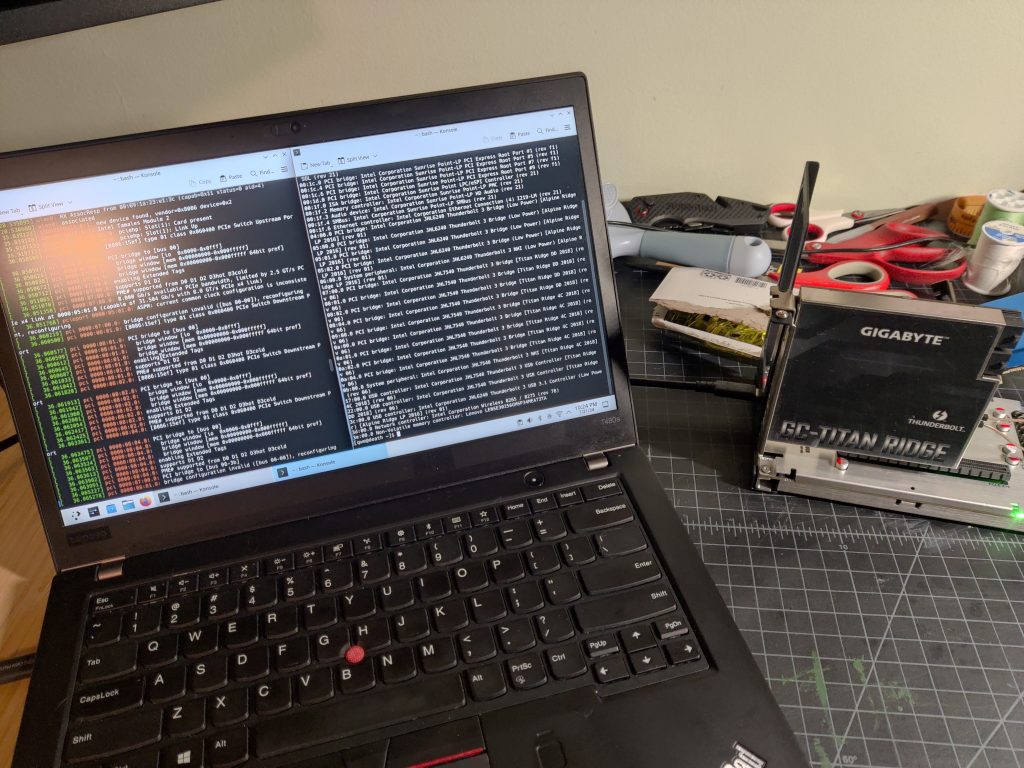

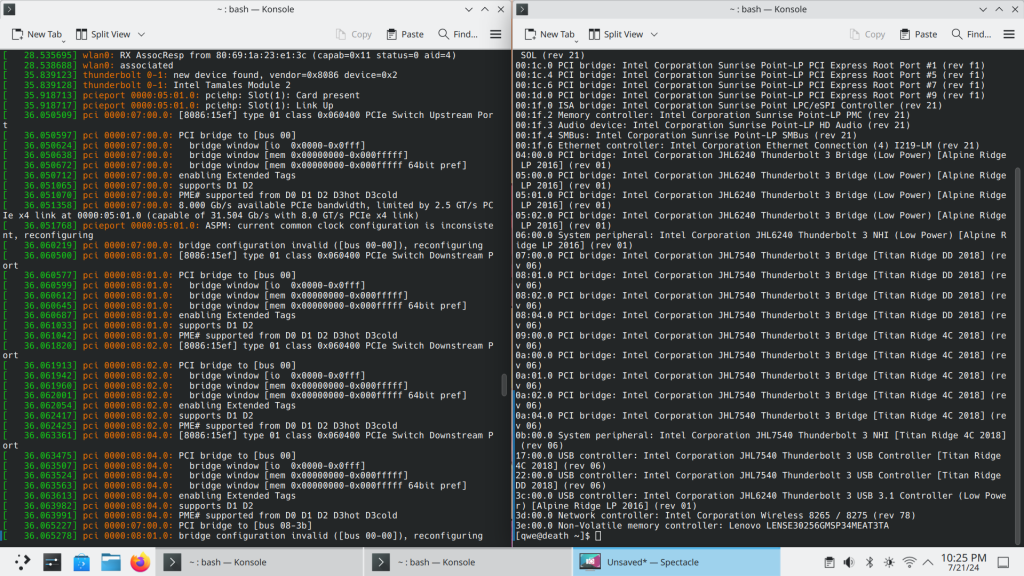

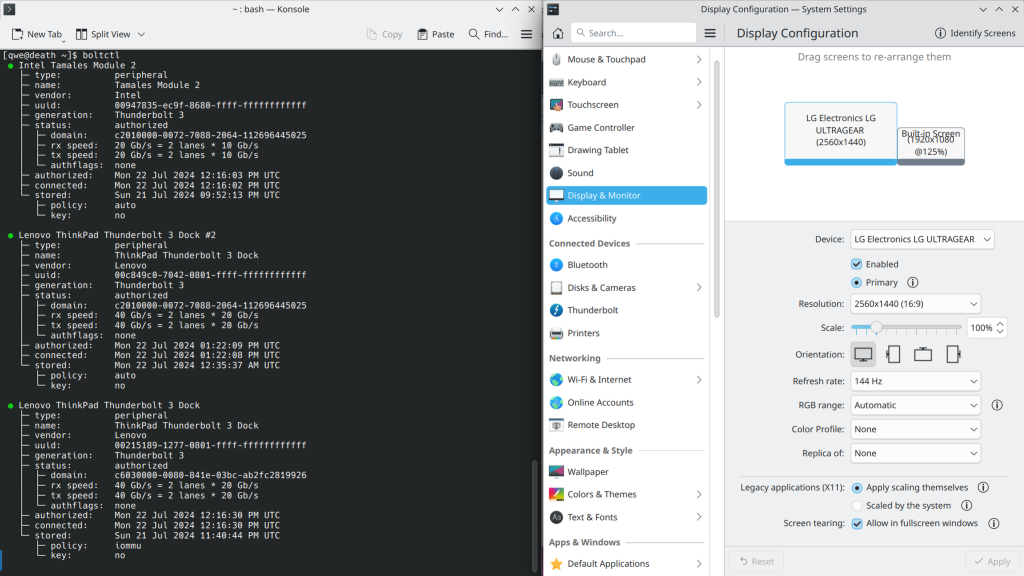

It just worked. dmesg showed a very lengthy bringup process and lspci showed the controller fully present and online.
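dmesg and lspci aren’t the only ways to see what came up. On Linux, the Thunderbolt driver also exposes each router under /sys/bus/thunderbolt/devices, in directories named `<domain>-<route>` (route string in hex), with `vendor_name` and `device_name` attributes. The Python sketch below lists them with their domain and hop depth. One assumption worth flagging: since each Thunderbolt host controller gets its own domain, a card like the Titan Ridge hanging off the laptop’s Thunderbolt link should show up as a new domain rather than as a deeper hop in the laptop’s tree. That hasn’t been verified against this exact setup, so treat it as an expectation, not an observation.

```python
import re
from pathlib import Path

# Standard sysfs location for Thunderbolt/USB4 routers on Linux.
TB_DEVICES = Path("/sys/bus/thunderbolt/devices")

# Router directories are named "<domain>-<route>", route in lowercase hex
# (e.g. "0-0" is the host router of domain 0). Other entries such as
# "domain0", XDomain links, or retimers use other formats and are skipped.
ROUTER_NAME = re.compile(r"^(\d+)-([0-9a-f]+)$")


def parse_router_name(name: str):
    """Return (domain, route) for a router directory name, or None."""
    m = ROUTER_NAME.match(name)
    if m is None:
        return None
    return int(m.group(1)), int(m.group(2), 16)


def route_depth(route: int) -> int:
    """Hops below the host router; each hop occupies one byte of the route string."""
    return (route.bit_length() + 7) // 8


def read_attr(dev: Path, name: str) -> str:
    """Read a sysfs attribute, returning '?' if it is missing or unreadable."""
    try:
        return (dev / name).read_text().strip()
    except OSError:
        return "?"


def list_routers(root: Path = TB_DEVICES):
    """Return sorted (domain, depth, name, vendor, device) tuples for every router."""
    if not root.is_dir():
        return []
    routers = []
    for dev in root.iterdir():
        parsed = parse_router_name(dev.name)
        if parsed is None:
            continue
        domain, route = parsed
        routers.append((domain, route_depth(route), dev.name,
                        read_attr(dev, "vendor_name"), read_attr(dev, "device_name")))
    return sorted(routers)


if __name__ == "__main__":
    for domain, depth, name, vendor, device in list_routers():
        # Indent by hop depth so daisy-chained devices read as a tree.
        print(f"domain {domain} {'  ' * depth}{name}: {vendor} {device}")
```

On a machine without Thunderbolt (or without sysfs), it simply prints nothing.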

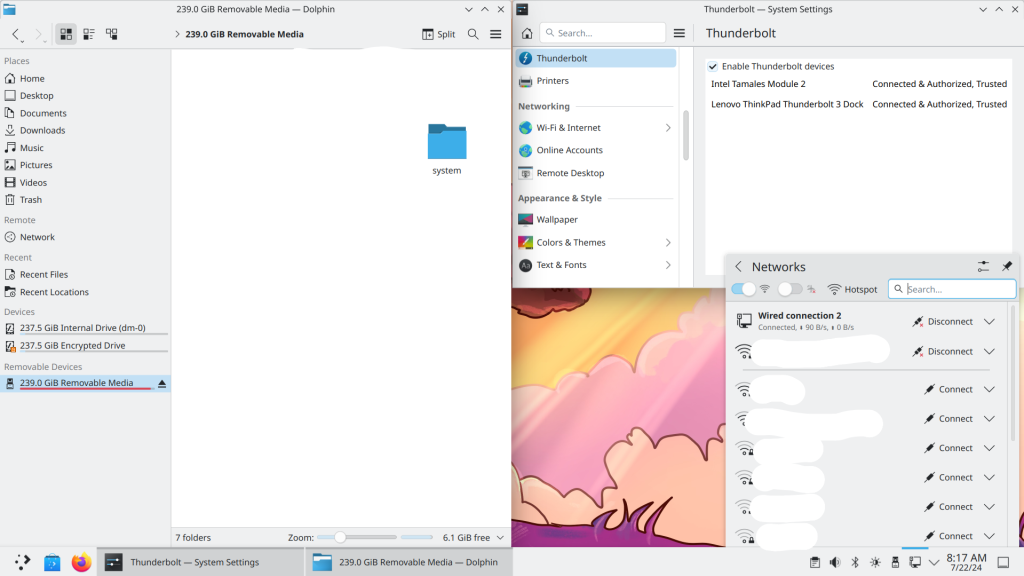

I quickly grabbed a ThinkPad Thunderbolt dock and plugged in some devices, then attached the dock to the card.

It worked. It just worked. I couldn’t believe it. I nearly choked with laughter. No real debugging was necessary, just a lot of checking cables and rebooting. I had no clue how this unholy contraption wasn’t catching fire, let alone functioning correctly, but it was.

I’m guessing the USB header is there so that USB-C devices plugged directly into the card’s ports work; when I tried attaching a flash drive directly, nothing happened.

What about display output?

The primary use case for Thunderbolt is laptop docks. On top of PCIe traffic, Thunderbolt can carry USB and DisplayPort traffic at the same time, multiplexing the data streams over a single link. Thunderbolt controllers integrated into laptops have a DisplayPort output from the GPU routed into them, but the Titan Ridge card, being a “normal” PCIe device, doesn’t have that luxury. Instead, it provides two mini-DisplayPort input ports, one for each Thunderbolt port, along with mini-DP to DP cables to plug into your GPU.

In order to get display output working through this card, we’ll need to feed a DisplayPort signal into these ports. I started with a cheap, $20 USB-C to DisplayPort adapter I had lying around.

This seemed to work, but only at 1080p@60Hz. My display is 1440p@144Hz, and at that resolution this setup produced only a black screen with occasional flickers. Bad connection? Crummy adapter? Either way, a better idea presented itself, thanks to two facts:
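Some back-of-the-envelope arithmetic shows how much bigger an ask 1440p@144Hz is than 1080p@60Hz. The Python sketch below counts only visible pixels at an assumed 24 bits per pixel (blanking intervals are ignored, so real requirements are somewhat higher) and compares that against the usable payload of 2- and 4-lane DisplayPort links at the RBR, HBR and HBR2 rates after 8b/10b encoding. It doesn’t diagnose the adapter; it only shows why a marginal link, or one that trained at a lower rate or with fewer lanes, could manage one mode and not the other.

```python
def active_pixel_gbps(width: int, height: int, refresh_hz: int, bpp: int = 24) -> float:
    """Bandwidth of the visible pixels alone, in Gbit/s (blanking is ignored)."""
    return width * height * refresh_hz * bpp / 1e9


# Raw per-lane DisplayPort link rates in Gbit/s. These rates use 8b/10b
# encoding, so only 80% of the raw rate carries pixel data.
DP_LANE_RATES = {"RBR": 1.62, "HBR": 2.7, "HBR2": 5.4}


def dp_payload_gbps(rate: str, lanes: int) -> float:
    """Usable payload of a DisplayPort link with the given rate and lane count."""
    return DP_LANE_RATES[rate] * lanes * 0.8


MODES = {"1080p@60Hz": (1920, 1080, 60), "1440p@144Hz": (2560, 1440, 144)}

if __name__ == "__main__":
    for label, (w, h, hz) in MODES.items():
        need = active_pixel_gbps(w, h, hz)
        # Every rate/lane combination whose payload covers the active pixels.
        fits = [f"{rate} x{lanes}" for rate in DP_LANE_RATES for lanes in (2, 4)
                if dp_payload_gbps(rate, lanes) >= need]
        print(f"{label}: ~{need:.2f} Gbit/s; fits on {', '.join(fits) or 'none'}")
```

Run as a script, it reports roughly 2.99 Gbit/s for 1080p@60Hz, which fits most of the listed configurations, and roughly 12.74 Gbit/s for 1440p@144Hz, which among these only fits a 4-lane HBR2 link.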

- I had purchased a second ThinkPad Thunderbolt dock from the flea market a few months back.

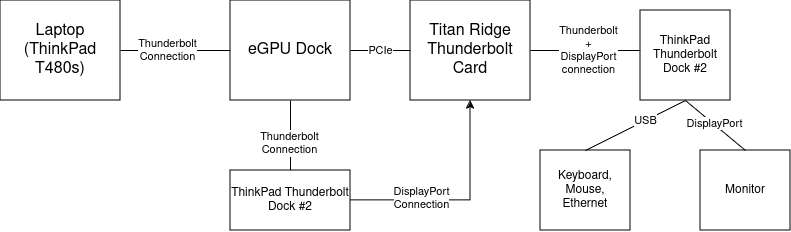

- The ThinkPad T480s driving all of this only had one Thunderbolt 3 port… but the PCIe enclosure had a downstream Thunderbolt port.

So, I could take the second Thunderbolt dock, attach it to the PCIe enclosure, and route its DisplayPort output back into the Titan Ridge’s display input, which would then drive a display through the first Thunderbolt dock all the way to my monitor.

Here’s a delusional diagram of this demented setup:

And yes, it works.

What else?

As I write this, I’m still shocked that instead of opening a portal to a Lovecraftian horror dimension, this absurd setup just worked as expected. What more is there to say?

Well, we could try going in the other direction.

What happens when you attach an eGPU enclosure to a Thunderbolt card?

The answer to this is much closer to what I was expecting.

I attempted this setup with two motherboards: an old Gigabyte GA-Z170P-SLI with an Intel i5-6500, and my daily driver, a Gigabyte X570 Aorus Pro with a Ryzen 9 3950X.

The Intel board did not cooperate with the Thunderbolt card at all. Despite the board having a Thunderbolt header, the card refused to start unless I bridged its header pins as shown earlier. It then displayed a variety of symptoms, most often doing nothing but occasionally crashing or failing to POST. Eventually the behavior settled into “it crashes on hot-plugging the enclosure, but if you try to boot with it plugged in, the PCIe bus goes out to lunch”.

The X570 is the board I originally bought this Thunderbolt card for, and I daily-drove that combination with a Thunderbolt dock for a few years. I was able to bring up an NVMe drive with the enclosure attached without too much trouble (only a handful of reboots and kernel command-line changes, about par for the course with Thunderbolt), but my AMD GPU stubbornly refused to initialize. About 30 seconds after power-on, this appeared in dmesg:

Nov 27 12:23:32 a kernel: [drm:amdgpu_bo_init [amdgpu]] *ERROR* Unable to set WC memtype for the aperture base

Nov 27 12:23:32 a kernel: [drm:amdgpu_device_init.cold [amdgpu]] *ERROR* sw_init of IP block <gmc_v10_0> failed -22

This led me to an open bug report pointing out that, as I said earlier, hot-plugging GPUs generally doesn’t work. Clearly, my GPU was being attached after boot and failing as a result.

However, this motherboard has full awareness of Thunderbolt, including BIOS options for it. By setting “USB Type-C with Titan Ridge Boot Support” to Enabled, I got the BIOS to detect the Thunderbolt-attached PCIe device at boot, and it came up successfully.

So, not nearly as instantly functional as the other way around, and it only really works with help from the BIOS. But hey, I did get it working!

Conclusion

Certain people in my life continually tell me that I’m cursed. I think they’re right: I can’t reliably use a computer without it breaking in some fashion or another.

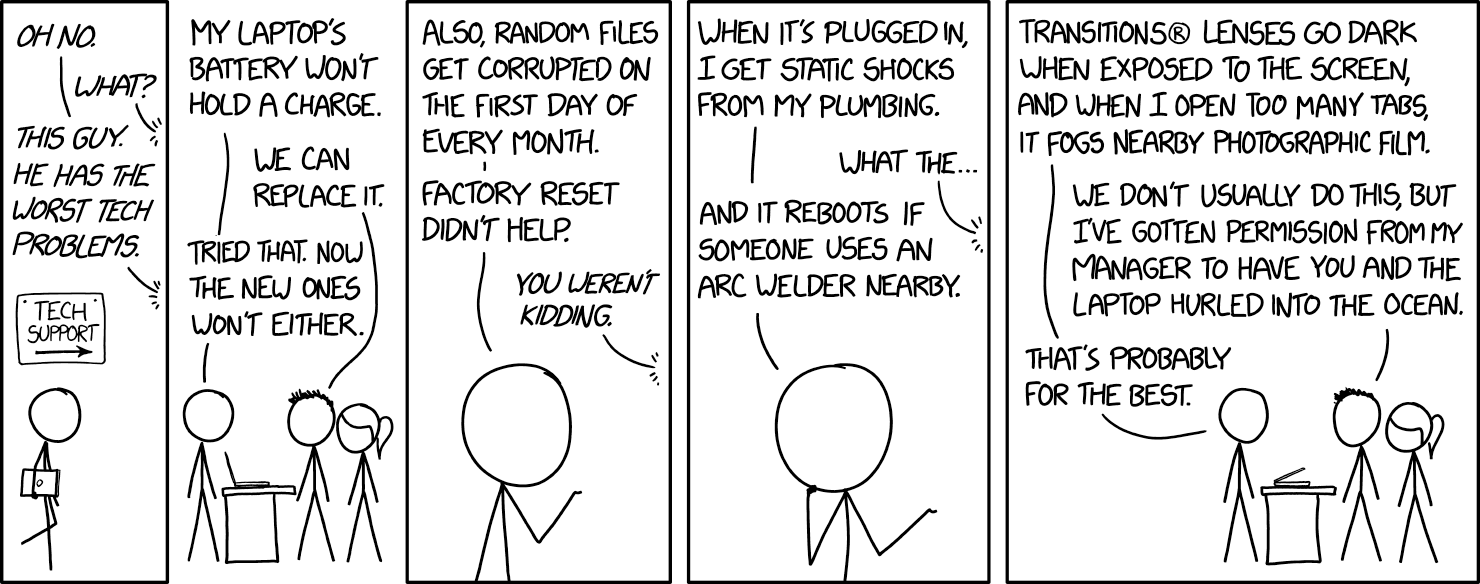

Alternatively, https://xkcd.com/1316/

The nature of the curse, at least this year, seems to be “you cannot use a computer without it breaking, but you can construct Rube Goldberg machines worth over $900 at MSRP to force signals to travel further than reasonable and it’ll just work”.

I wonder what would happen if I bought another eGPU enclosure and continued the chain further.
